Consequences of the COVID-19 tyranny: Turmoil on children’s mental health

12 May, 2022

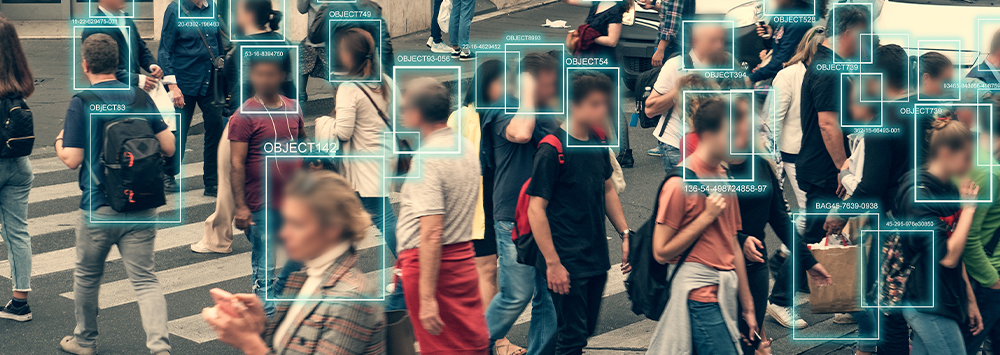

The perils of digital identity

31 May, 2022

23 May, 2022

Europe is working on a monumental project to modernize its police forces and improve international cooperation between security agencies. One of the most controversial elements of this Police Cooperation Code, framed within Prüm II, is the large-scale facial recognition system it would introduce. Prüm II is in turn an extension of the Prüm Treaty, initially signed by seven countries in 2005 to automate the exchange of identity data and to fight terrorism and cross-border crime. Until now, police in various European states have had at their disposal vast files of fingerprints, genetic information and car license plates, which they could cross-reference with those of other forces to find a potential criminal. Now, the intention is to add millions of photos of the faces of "suspects and convicted criminals" to these systems.

According to the European Commission, the measures aim to create a framework in which officers can exchange information with counterparts in other countries far more easily. The proposal allows one nation to compare a photo against another's files and find out whether there are matches, creating one of the largest known facial recognition systems. The documents that served as the basis for early discussions of Prüm II revealed that the digital stores of different countries already held numerous photographs of faces: 30 million in Hungary, 17 million in Italy and 6 million in France, an indication of the project's scale. These images came from criminals, prisoners and even unidentified corpses.

Lawyer and writer Ella Jakubowska, policy and campaign manager at European Digital Rights, an international non-profit association that defends civil rights, advocates limiting biometric surveillance and denounces European authorities' decision to double the number of facial recognition databases. Jakubowska is at the forefront of the Reclaim Your Face initiative, which calls for strict regulation of biometric technologies to prevent interference with fundamental rights. As the campaign statement says, governments and corporations will not hesitate to use facial recognition against us, invading our public space on the basis of who we are or what we look like, with all the racist, classist and sexist implications such control could entail. Several studies have shown that facial recognition is much more likely to make mistakes with young people, women and dark-skinned individuals, errors that can have traumatic consequences.

In the wake of the 2011 Tottenham riots in England, racist bias was instrumental in the creation of a database, known as the Gang Violence Matrix (GVM), containing thousands of images of young black men alleged to belong to organized gangs. The authorities' urge to assess a hypothetical threat, spurred by prejudiced arguments blaming the gangs for starting the unrest, led the police to store photographs of people who had nothing to do with the protests. It eventually emerged that only a small portion of these people were connected to the riots, and the police were forced to delete a significant portion of the records.

Yoav Schlesinger, director of Salesforce's Ethical Artificial Intelligence division, says: "The reality is that if a government wants to use these systems to surveil us, it can now do so in an automated way and on a larger scale than has ever been possible before. If you're a white male it's easier to think of these kinds of technologies as contributing to your security, but if you're not in this group, things may be different."

A similar situation occurred in the Netherlands, where more than 200,000 images of people who had once been suspected or convicted of a criminal act were deleted. Cases like these reveal just how many images are in the hands of the authorities and how greatly the risk of misidentification is multiplied. Closed-circuit camera surveillance has long been a common means of control for law enforcement agencies, and its use has been at the center of debate for years. Facial recognition, as envisaged in the new plans, combines photos from social networks or from a suspect's devices with police footage from these cameras, and it is retrospective on top of that. Jakubowska criticizes this retrospective element, arguing that analyzing images from three or five years ago could cause even greater harm: it could allow authorities to trace the past contacts of someone who is now critical of the government, or reveal that person's involvement in a protest.

The European Commission stresses that image cross-checking will not be extended to the general population and will be limited to convicts and suspects. Citing the guide that defines how the system will work, it also insists that the data will not be stored in a permanent central database, although there will be a "central router" that acts as a message broker, so that police in each country do not have to request images from each individual national body. The activist, however, is concerned that the proposal opens the door to the widespread creation of large image archives, and about the way algorithms will be applied to identify subjects.

Where is the line between protecting citizens from certain threats and respecting their privacy? The old debate of security versus freedom is taking on a new form with the development of these large-scale facial recognition systems, whose scope is still difficult to gauge.